Chess Detection with YOLOv8

In this project, our team of five built a system that detects chess pieces using computer vision. It combines YOLOv8, an object detection model, with edge detection: edge detection locates the board, and YOLOv8 identifies and classifies the pieces on it across different board configurations. The combination is intended to keep detection accurate in harder cases, such as overlapping pieces or varying lighting. The project shows how AI-driven tools can analyze and digitize physical chess games, with possible applications in game analysis, training tools, and interactive gameplay systems.

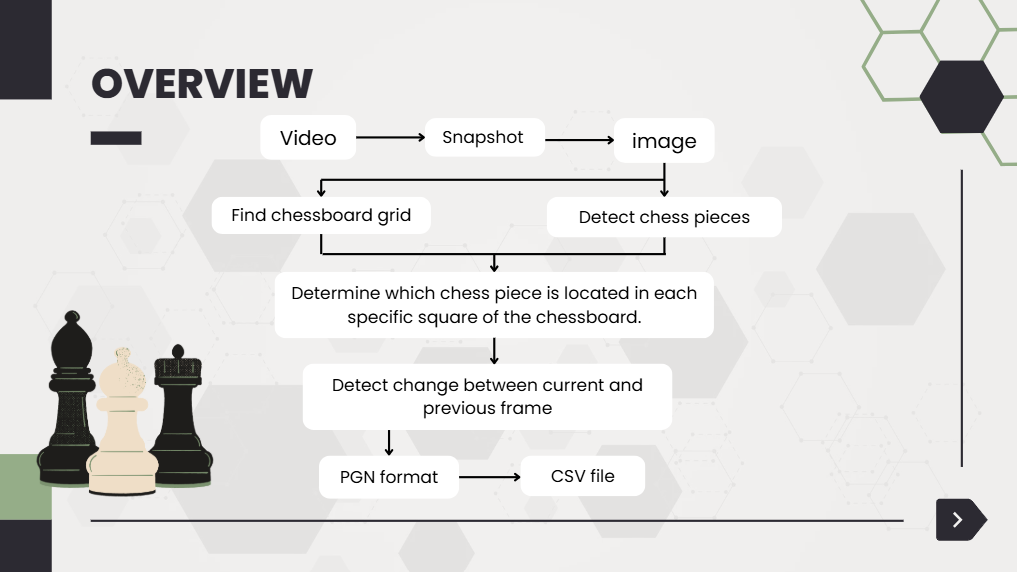

The system operates as follows:

1. Edge Detection: Initially, the edges of the chessboard are detected to determine its position within the frame.

2. Piece Detection: YOLOv8 identifies the chess pieces on the board.

3. Position Mapping: The system compares the pixel coordinates of the board with those of the detected pieces, then calculates and stores the square each piece most likely occupies.

4. Frame-by-Frame Analysis: Each video frame is analyzed to detect movements of the pieces.

5. FEN Generation: When a piece is detected to have moved, the system generates the corresponding FEN (Forsyth-Edwards Notation) text and saves it to a CSV file.
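Steps 4 and 5 can be illustrated with a minimal sketch. It assumes each frame has already been reduced to a dictionary mapping square names (such as `"e4"`) to FEN piece letters; the function names `board_to_fen_placement` and `log_position_changes` and the CSV column names are hypothetical, not taken from the project code. Only the piece-placement field of FEN is built here, because the remaining fields (side to move, castling rights, and so on) cannot be read from a single frame.

```python
import csv


def board_to_fen_placement(pieces):
    """Build the piece-placement field of a FEN string.

    `pieces` maps square names such as "e4" to FEN piece letters
    (uppercase for White, lowercase for Black).
    """
    ranks = []
    for rank in range(8, 0, -1):  # FEN lists rank 8 first
        row, empty = "", 0
        for file in "abcdefgh":
            symbol = pieces.get(f"{file}{rank}")
            if symbol is None:
                empty += 1  # count consecutive empty squares
            else:
                if empty:
                    row += str(empty)
                    empty = 0
                row += symbol
        if empty:
            row += str(empty)
        ranks.append(row)
    return "/".join(ranks)


def log_position_changes(frames, csv_path):
    """Write one CSV row each time the placement differs from the previous frame.

    `frames` is an iterable of per-frame square-to-piece dictionaries.
    Returns the list of (frame_index, placement) rows that were written.
    """
    previous = None
    logged = []
    with open(csv_path, "w", newline="") as handle:
        writer = csv.writer(handle)
        writer.writerow(["frame", "fen_placement"])  # hypothetical column names
        for index, pieces in enumerate(frames):
            placement = board_to_fen_placement(pieces)
            if placement != previous:
                writer.writerow([index, placement])
                logged.append((index, placement))
                previous = placement
    return logged
```

Comparing placement strings between consecutive frames is a simple way to detect a move. A real video pipeline would likely also need to ignore frames where a hand covers the board or a detection flickers, which this sketch does not attempt.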

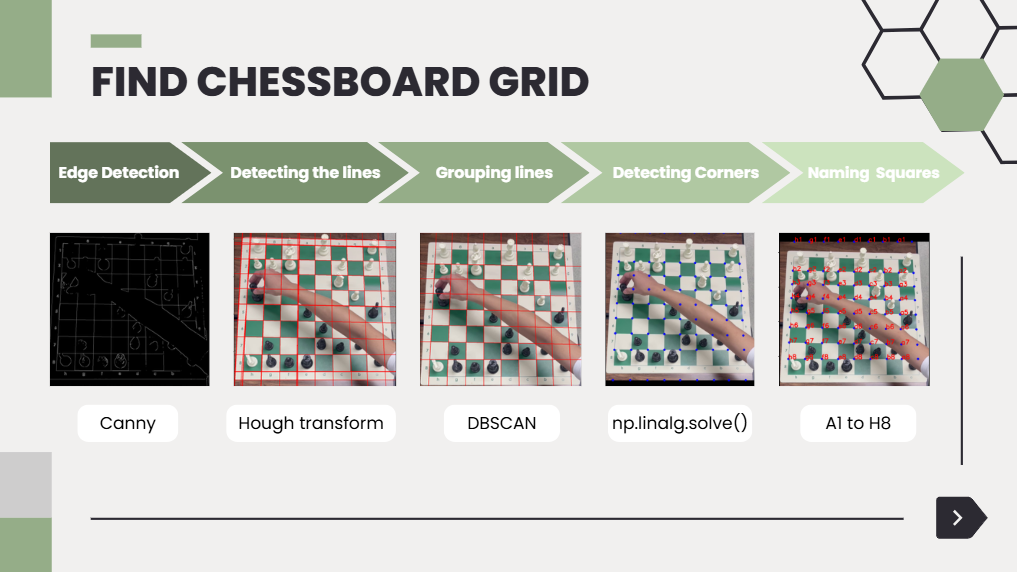

This is the process used to determine the positions of the squares on the chessboard.
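Once the board outline is known, mapping a pixel to a square can be sketched as below. This is a simplified illustration, not the project's actual method: it assumes the board appears as an axis-aligned rectangle viewed from White's side (a8 at the top-left), and the name `square_at` is hypothetical. A board filmed at an angle would first need a perspective correction, which is not shown.

```python
def square_at(point, board_box):
    """Return the square name (e.g. "e4") containing a pixel, or None if off-board.

    `point` is (x, y) and `board_box` is (left, top, right, bottom), in pixels.
    Assumes an axis-aligned board seen from White's side:
    a8 at the top-left corner, h1 at the bottom-right corner.
    """
    x, y = point
    left, top, right, bottom = board_box
    if not (left <= x < right and top <= y < bottom):
        return None
    col = int((x - left) * 8 / (right - left))  # 0 corresponds to file a
    row = int((y - top) * 8 / (bottom - top))   # 0 corresponds to rank 8
    return "abcdefgh"[col] + str(8 - row)
```

For example, with an 800 by 800 pixel board whose top-left corner is at the origin, `square_at((450, 450), (0, 0, 800, 800))` falls in the fifth column and fifth row from the top, which is `"e4"`.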

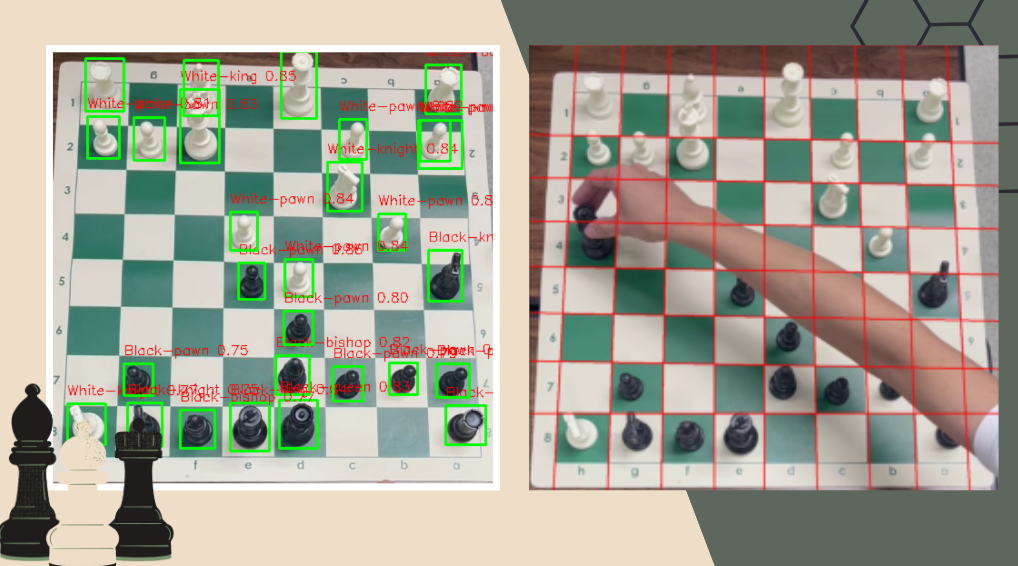

This is the result of detecting the type of each chess piece on the board.
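A detection step of this kind could look roughly like the following sketch, which uses the `ultralytics` package. The weights file name `chess_yolov8s.pt`, the class labels in `FEN_SYMBOLS`, the confidence threshold, and the helper names are all assumptions for illustration; the real labels depend on how the training dataset was annotated.

```python
# Hypothetical mapping from detector class labels to FEN piece letters.
FEN_SYMBOLS = {
    "white-king": "K", "white-queen": "Q", "white-rook": "R",
    "white-bishop": "B", "white-knight": "N", "white-pawn": "P",
    "black-king": "k", "black-queen": "q", "black-rook": "r",
    "black-bishop": "b", "black-knight": "n", "black-pawn": "p",
}


def load_model(weights="chess_yolov8s.pt"):
    """Load a fine-tuned YOLOv8 model (the weights path is a placeholder)."""
    from ultralytics import YOLO  # lazy import; requires `pip install ultralytics`
    return YOLO(weights)


def detect_pieces(model, frame, min_conf=0.5):
    """Run the model on one frame.

    Returns a list of (label, confidence, (x1, y1, x2, y2)) tuples.
    """
    result = model.predict(frame, conf=min_conf, verbose=False)[0]
    detections = []
    for xyxy, cls_id, conf in zip(result.boxes.xyxy.tolist(),
                                  result.boxes.cls.tolist(),
                                  result.boxes.conf.tolist()):
        detections.append((result.names[int(cls_id)], conf, tuple(xyxy)))
    return detections


def base_point(box):
    """Bottom-center of a bounding box, a rough proxy for where the piece stands."""
    x1, y1, x2, y2 = box
    return ((x1 + x2) / 2, y2)
```

Using the bottom-center of each box rather than its center is a design choice for this sketch: tall pieces seen at an angle can extend into the square behind them, so the base of the box is often a better indicator of the occupied square.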

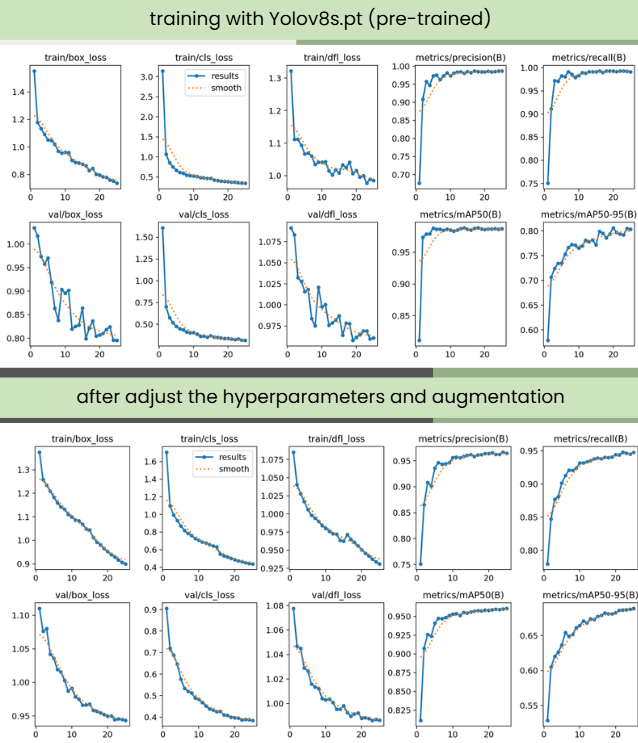

The model's performance improved after hyperparameter tuning and data augmentation. Initially, with the pre-trained YOLOv8s model, the training and validation losses were higher, and precision, recall, and mAP were moderate. After the adjustments, the losses decreased steadily and all three metrics increased, reflecting more accurate and reliable detection. These improvements indicate that the fine-tuned model detects and classifies chess pieces more effectively across frames, which in turn supports more accurate tracking of piece positions and movements when generating FEN output.
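To make the metrics concrete, the sketch below computes precision and recall for a single frame by matching predicted boxes to ground-truth boxes on label and intersection over union (IoU). The function names and the greedy matching rule are illustrative assumptions, and the 0.5 threshold is a common convention rather than a value reported by the project. mAP extends this idea by averaging precision over recall levels and then over classes.

```python
def iou(a, b):
    """Intersection over union of two (x1, y1, x2, y2) boxes."""
    ax1, ay1, ax2, ay2 = a
    bx1, by1, bx2, by2 = b
    inter_w = max(0.0, min(ax2, bx2) - max(ax1, bx1))
    inter_h = max(0.0, min(ay2, by2) - max(ay1, by1))
    inter = inter_w * inter_h
    union = (ax2 - ax1) * (ay2 - ay1) + (bx2 - bx1) * (by2 - by1) - inter
    return inter / union if union > 0 else 0.0


def precision_recall(predictions, ground_truth, iou_threshold=0.5):
    """Greedy matching of predictions to ground truth.

    Both arguments are lists of (label, box). A prediction is a true positive
    if an unmatched ground-truth box has the same label and enough overlap.
    """
    unmatched = list(ground_truth)
    true_positives = 0
    for label, box in predictions:
        match = next(
            (gt for gt in unmatched
             if gt[0] == label and iou(box, gt[1]) >= iou_threshold),
            None,
        )
        if match is not None:
            unmatched.remove(match)  # each ground-truth box matches at most once
            true_positives += 1
    precision = true_positives / len(predictions) if predictions else 0.0
    recall = true_positives / len(ground_truth) if ground_truth else 0.0
    return precision, recall
```

Precision drops when the model reports pieces that are not there or mislabels them, and recall drops when it misses pieces that are on the board.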